NuGet is one of those tools that keeps getting more important. A while back, it was useful for adding small, specialized packages to particular projects. Nowadays it’s fundamental, and it will be even more so when vNext is live. We all use it to add packages, but how difficult is it to create and publish our own? As it turns out, not very difficult at all.

I’d been meaning to turn my data annotation validator into a NuGet package for some time – and today, I finally did something about it. This is how you do it:

1. Get nuget.exe

Go to nuget.org or nuget.codeplex.com and download nuget.exe. Once you’ve got it, save it somewhere nice and easy because you’re going to need to…

2. Add it to the path

Right-click on ‘Computer’ in Windows Explorer, select ‘Properties’ and then ‘Advanced system settings’. Then click on ‘Environment Variables’ in the dialog.

In the Environment Variables dialog, find the Path variable and click Edit.

In the next dialog, add a semicolon at the end of the existing value and then put in the full path to the folder that contains nuget.exe (in my case that was c:\Nuget):
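Once the path has been updated, it is worth confirming that nuget.exe can actually be found before moving on. The following is a minimal sketch in Python, not part of NuGet itself; the helper name `find_tool` is made up for illustration. It does the same lookup the command window does when you type a command name.

```python
import shutil


def find_tool(name, search_path=None):
    """Return the full path of an executable found on the path, or None."""
    # shutil.which performs the usual PATH lookup (including PATHEXT on
    # Windows, so "nuget" matches nuget.exe). search_path=None means
    # "use the PATH of the current process".
    return shutil.which(name, path=search_path)


if __name__ == "__main__":
    location = find_tool("nuget")
    if location is None:
        print("nuget was not found on the path; check the entry you added")
    else:
        print("nuget found at", location)
```

Run it from a newly opened command window, because windows that were already open keep the old value of Path.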

3. Create a nuspec file

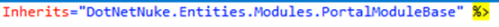

This is a little XML config/specification file used in packaging, and you generate it from the command line. Open a command window, navigate to the folder where you have your Visual Studio project file and type in:

nuget spec

This, of course, is why you wanted nuget in the path. Nuget.exe will now generate the XML file using your assembly settings, placing default text where it doesn’t have the information. Here’s a raw one:

And here’s one that’s been edited:
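When editing the file by hand, it is easy to leave some of the generated default text behind or to empty a field that NuGet needs. As a rough sanity check, the sketch below (Python, and not something NuGet provides) parses a nuspec and reports missing required metadata and leftover defaults. The required elements are `id`, `version`, `authors` and `description`; the placeholder markers and all the sample values are assumptions for illustration, so adjust them to match what your own generated file contains.

```python
import xml.etree.ElementTree as ET

# Elements that must be present and non-empty inside <metadata>.
REQUIRED = ("id", "version", "authors", "description")

# Assumed examples of generator default text; adjust to match your own file.
PLACEHOLDER_MARKERS = ("_HERE_OR_DELETE_THIS_LINE", "Tag1 Tag2")

# Illustrative nuspec content only; the values are made up for this sketch.
SAMPLE = """<package>
  <metadata>
    <id>DataAnnotationValidator</id>
    <version>1.0.0</version>
    <authors>Example Author</authors>
    <description></description>
    <projectUrl>http://PROJECT_URL_HERE_OR_DELETE_THIS_LINE</projectUrl>
    <tags>Tag1 Tag2</tags>
  </metadata>
</package>"""


def local_name(tag):
    """Strip an XML namespace such as '{http://...}id' down to 'id'."""
    return tag.rsplit("}", 1)[-1]


def check_nuspec(xml_text):
    """Return a list of human-readable problems found in a nuspec document."""
    root = ET.fromstring(xml_text)
    metadata = next((c for c in root if local_name(c.tag) == "metadata"), None)
    if metadata is None:
        return ["no <metadata> element found"]

    # Map each metadata element name to its trimmed text.
    values = {local_name(c.tag): (c.text or "").strip() for c in metadata}
    problems = []
    for name in REQUIRED:
        if not values.get(name):
            problems.append(f"missing or empty <{name}>")
    for name, text in values.items():
        if any(marker in text for marker in PLACEHOLDER_MARKERS):
            problems.append(f"<{name}> still contains default text")
    return problems


if __name__ == "__main__":
    for problem in check_nuspec(SAMPLE):
        print(problem)
```

Replacement tokens such as `$id$` and `$version$` are deliberately not flagged, because nuget fills those in from the project when you pack from a project file.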

4. Pack it ready for publishing

This is again done using the command line, adding an argument to tell it to package the release build, not the default (which is probably debug). I’ve put brackets around the part that you would need to replace with your own project file:

nuget pack [DataAnnotationValidator.csproj] -Prop Configuration=Release

The result is another file, this time with the extension nupkg.
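A nupkg file is an ordinary ZIP archive, so you can look inside it to confirm that your dll was actually packed. The sketch below is a minimal Python example, not a NuGet feature. It lists the assemblies under the package's `lib` folder. The demo package it builds in memory is a stand-in, and the `net45` folder name is an assumption; your package will use whatever framework folder matches your project.

```python
import io
import zipfile


def list_assemblies(nupkg):
    """Return the .dll entries under lib/ in a package (path or file object)."""
    with zipfile.ZipFile(nupkg) as archive:
        return sorted(
            name for name in archive.namelist()
            if name.lower().startswith("lib/") and name.lower().endswith(".dll")
        )


def build_demo_package():
    """Build a tiny in-memory stand-in for a real nupkg, for demonstration only."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("DataAnnotationValidator.nuspec", "<package />")
        # Hypothetical framework folder; an empty file stands in for the dll.
        archive.writestr("lib/net45/DataAnnotationValidator.dll", b"")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    print(list_assemblies(build_demo_package()))
```

To check a real package, pass the path of the nupkg file that `nuget pack` produced to `list_assemblies` instead of the demo buffer.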

5. Publish it to NuGet

That means first you have to go to nuget.org and sign up, which is all very straightforward. Once you’re signed up and you’ve clicked on the link in the confirmation email, you will have an API key on your profile page, and that allows you to publish your package. Get your key and then run the following in your command window:

nuget setApiKey [key from profile page]

That saves the key on your machine, so you won’t have to put it in every time you publish from the command line. Now go to the Upload Package page and browse for your nupkg file:

It asks you to verify the details…

And then that’s it – your package is online and available for download.

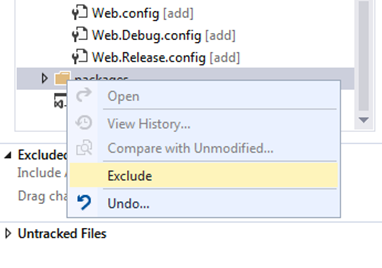

I installed the package to test it, and the config file was updated…

the reference was added…

and the dll was in the bin directory:

And that allowed me to add the control to the toolbox and use it as part of my project:

Obviously, there’s a lot more you can do with NuGet – like adding support for multiple frameworks – and I may well look at some of the options in a future post.
